OEMs Facing Final Exam Harken to Domain-Specific AI

Bosch has shown that a conventional sensor combo can potentially pass the AEB/P-AEB tests with the help of domain-specific AI algorithms.

The new radar sensors use artificial intelligence for improved perception (Image: Bosch)

DETROIT - Robert Bosch, the world's leading Tier 1 automotive supplier, came to the AutoSens automotive technology conference this week to unveil a potentially effective solution for automotive OEMs facing a federal deadline to upgrade Automatic Emergency Braking (AEB) systems.

Bosch says that a combination of conventional camera and radar, with no additional sensors, can potentially meet the braking requirements of the upcoming FMVSS 127 mandate.

The new rule includes pedestrian AEB (P-AEB), which must spot pedestrians by day and at night and automatically apply the brakes before the vehicle hits them.

The key to Bosch’s solution, which requires no additional sensors, is a small, domain-specific AI model Bosch has trained on its existing camera/radar combo, according to Elizabeth Kao, Bosch’s director of ADAS product management. Some of those sensors used for training were one or two generations old, she added.

“We were able to demonstrate that a variety of vehicles, not just small passenger cars but also pickup trucks with mass and no added sensors” can automatically stop before hitting a vehicle or pedestrian, passing FMVSS 127 tests, Kao said.

FMVSS 127 stipulates that all passenger cars and light trucks must be able to automatically brake at up to 90 mph when a collision with a lead vehicle is imminent, and at up to 45 mph when a pedestrian is detected in daylight or nighttime.

For Bosch, the mission was clear: enable automakers to meet, affordably and quickly, the September 2029 deadline for the AEB/P-AEB rules set last year by the National Highway Traffic Safety Administration (NHTSA).

What about thermal sensors?

Pierrick Boulay, senior technology and market analyst for automotive semiconductors at Yole Group, who attended the conference, noted, “To me, [Bosch’s development] was big news, given that thermal imaging companies have been heavily lobbying the industry to add one more sensor to the mix so that carmakers pass the FMVSS 127 tests.”

But if OEMs can stick with just camera and radar, why add another sensor? What they want to avoid is a development cost hike that they would likely pass on to customers.

“I think Bosch just nipped in the bud all thermal imaging companies’ hopes for a large automotive market,” said Boulay. “I do not say that thermal cameras will not be used for AEB but that it would remain a niche.”

Domain-specific AI

The automotive industry is on the cusp of adopting a variety of AI models in its vehicles.

Carmakers have discussed using large language models (LLMs) to develop effective natural-language-based human-machine interfaces (HMI).

With generative AI, some are even attempting to develop end-to-end (E2E) AI models, which combine perception, prediction and planning into a single neural network.

However, most carmakers haven’t yet bought the notion that generative AI can solve the world’s automated vehicle problems.

Instead, what emerged as a running theme at this year’s AutoSens in Detroit was the development of domain-specific AI models to aggregate sensor performance.

Kao explained, “We started this [the development of AI models trained on sensors] as a proof-of-concept project.” A parallel program with another team explored new sensors, including thermal and infrared.

The AI-model training, said Kao, took less than six months. Even so, Bosch’s team conducted exhaustive tests under many different light conditions and scenarios. It used humans and dummies, both moving and standing still, on a test course. People, even when standing still, she said, are easier to detect because they produce a micro-Doppler effect.
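For readers unfamiliar with the term, the micro-Doppler point can be illustrated with the standard radar Doppler relation, f_d = 2·v·f_c/c: any small radial motion of a reflector shifts the frequency of the returned signal. The sketch below is illustrative only. The 77 GHz carrier (a common automotive radar band) and the example speeds are assumptions, not figures from Bosch, and the function name is hypothetical.

```python
# Illustrative sketch only: the 77 GHz carrier and the example speeds below
# are assumptions for this example, not figures supplied by Bosch.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def doppler_shift_hz(radial_speed_m_s: float, carrier_hz: float = 77e9) -> float:
    """Two-way Doppler shift for a reflector moving at the given radial speed."""
    return 2.0 * radial_speed_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    examples = [
        ("rigid, motionless reflector", 0.0),
        ("standing person, slight sway (assumed 0.05 m/s)", 0.05),
        ("walking pedestrian (assumed 1.4 m/s)", 1.4),
    ]
    for label, speed in examples:
        print(f"{label}: {doppler_shift_hz(speed):.1f} Hz")
```

Under these assumptions, even a few centimeters per second of body motion yields a nonzero frequency shift, while a perfectly rigid, motionless reflector yields none. That is one plausible reading of why a standing person gives the radar more to work with.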

How much processing power and memory are needed?

The trained AI model was clearly small enough to run on the test vehicles’ existing ECUs.

Kao explained, “The initial proof-of-concept demonstrating AEB functionality with existing Bosch camera and radar sensors was run directly on the camera itself, without the need for an external ECU.”

She added, “This was achieved using a chip with integrated AI capabilities and a neural network engine, co-developed by Bosch and a chip supplier. This integrated approach minimizes system complexity and latency.”

Overall processing power in ADAS is growing.

Kao explained, “Our current generation smart camera, demonstrated at AutoSens, utilizes less than 4 TOPS for the entire ADAS system. For our next-generation smart camera, we anticipate needing approximately 10 TOPS to run the complete ADAS software stack. This increase reflects the added complexity and enhanced capabilities of the next-generation system.”

Bosch would not say exactly how much processing power and memory are needed to run its domain-specific AI algorithm designed for AEB.

Kao explained, “As we are not developing and testing AEB in isolation, but as part of a comprehensive ADAS suite, it’s difficult to estimate … Memory requirements will likely increase in line with the processing power demands.”

Because FMVSS 127 requires even low-end cars to pass the tests, Boulay suspects that Bosch is not promoting a high-end processor or lots of memory. “The goal is still to be affordable,” he stressed.

Implementation

During the interview, Kao mentioned repeatedly that Bosch has already shipped 100 million automotive radars, positioning itself not just as an expert tier one but as a well-established sensor supplier in the automotive market.

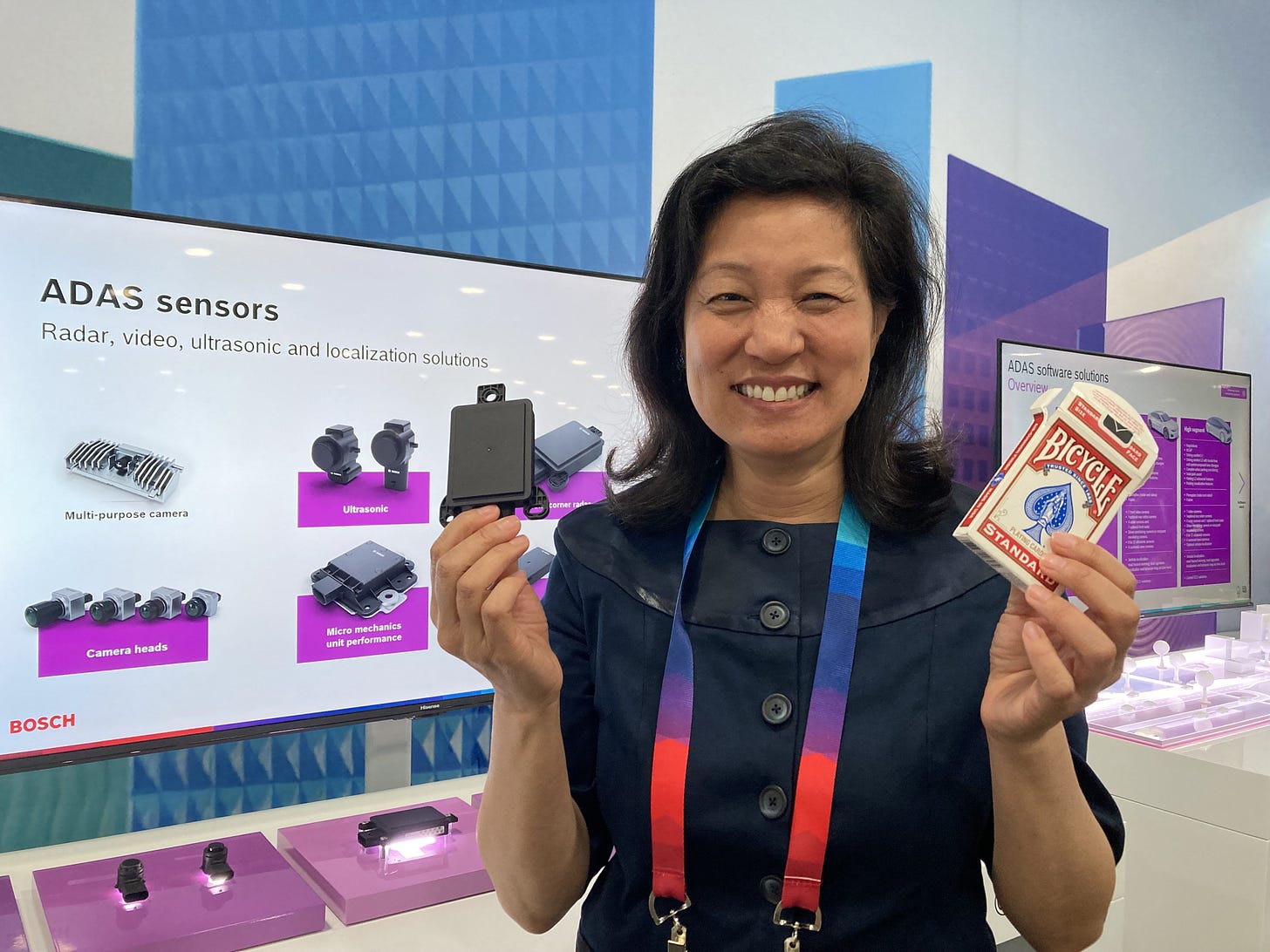

Elizabeth Kao shows off a new generation of Bosch radar, the size of a standard deck of playing cards. (Image: Junko Yoshida)

Boulay explained that Bosch developed its AEB solution using Gen 5 of its radar (today, it is Gen 7) and the previous generation of its camera. “Starting from that they trained their algorithm in low light conditions to fulfill the requirements.”

As Bosch encourages OEMs to use its solution, carmakers will have the freedom to go with the cameras and radars of their choice, abetted by Bosch’s AI algorithm. Depending on the cameras and radars chosen, the type of SoC teamed with the sensor, the available processing power in domain controllers and where the algorithm runs, the solution may need some tuning. But that’s an adjustment tier ones commonly offer to OEMs.

If it has to tune its solution for different vehicle architectures, can Bosch manage to scale support as its AI-driven AEB solution proliferates?

Kao said, “We understand that OEMs have varying vehicle architectures and levels of in-house expertise … While some OEMs require different levels of collaboration than others, our platform solutions are designed to address common requirements across various architectures.”

Alternatives?

Safety experts view NHTSA’s new AEB/P-AEB rule as “one of the best regulations” of recent years. Phil Koopman, professor at Carnegie Mellon University, praised NHTSA for “holding the industry’s feet to the fire.”

Nonetheless, some industry observers were puzzled by a comment in the ruling that reads: “NHTSA estimates that systems can achieve the requirements of this final rule primarily through upgraded software, with a limited number of vehicles needing additional hardware.”

One concern widely discussed last year was that spotting a pedestrian under roughly full-moon illumination, without adding a new sensor, would not be easy.

The challenges created by the AEB/P-AEB rule, however, have opened opportunities for startups focused on developing software that can be layered on top of existing hardware. This includes such newcomers as Neural Propulsion Systems (NPS) of Pleasanton, Calif., and PercivAI, a spin-off of Delft University of Technology’s Intelligent Vehicles Group.

Today, an established tier one in the automotive industry, such as Bosch, has shown that it’s possible for conventional camera and radar sensors to pass the AEB/P-AEB tests with the help of domain-specific AI algorithms.

Is FMVSS 127 on track?

For FMVSS 127, the goal is that by 2029, all cars sold in the US will meet the NHTSA requirements. Boulay said, “That means that while high-end cars, for which adding one more sensor might not be so much an issue, low-end cars will face challenges. Each new feature is a new cost pressure for the end-user.”

Despite concerns that the new White House regime might delay enforcement, “the Trump administration reviewed this regulation earlier in March, and so far, it is still on track for 2029,” said Boulay.

“2029 will come rapidly and designs will be frozen in 2026-2027 at last. That means that OEMs and tier ones still need to be prepared,” he added.

Competitors

The industry continues to add AI models to improve the performance of sensors and sensor fusion. Tier one Magna talked about the aggregation of sensor performance. Compal discussed AI-integrated infrared sensing technology at the conference.

But Bosch was alone in publicly announcing development of an AI model that enables conventional camera and radar sensors to pass their final exams.