AI’s Next Move: From Hype to Humdrum, Homework to Real Work

It’s time for AI to move from toys to tools. What are MCU/MPU vendors doing to sell billions of AI chips for practical consumer and industrial applications?

NUREMBERG — “Does your chip run LLM?” is the question every reporter asked here at the Embedded World conference in 2024. The world wanted to know if lowly MCUs and MPUs could handle—without going to the cloud—the inferences of rapidly changing, super hyped-up AI models.

This week in Nuremberg, however, the conversation on AI has turned decidedly humdrum.

Participants this year wanted to know how quickly they could put AI to work in countless industrial applications, from pharmaceutical manufacturing to rotors in electric motors.

Real-time inspection

At the Synaptics booth, 42 Technology (Cambridge, UK), a product design and technology consultancy, featured a real-time inspection demonstrator for pharmaceutical manufacturing. 42 Technology assembled the system by combining Balluff’s high-performance image acquisition system and Arcturus’s deep-learning models running on Synaptics’s Astra edge AI processor. The system detects contaminants in pills along with objects that don’t belong on the line. Then it verifies line clearance status.

But isn’t “anomaly detection” in manufacturing a mundane process that computer vision cameras should have solved decades ago?

When asked, Jamie Jeffs, 42 Technology’s director of Industrial Instrumentation, explained that installing the many high-resolution cameras required at every manufacturing step has always been very expensive. Pre-AI machine vision systems had no deep-learning models or analytics, so somebody had to program every single defect that could happen and run the result on big computer systems.

Rapid inspection matters to drug companies. Regulatory compliance required in pharmaceutical manufacturing demands inspections at every step of production. Manually, “it takes at least 40 to 60 minutes for every check,” noted Jeffs.

Beyond real-time inspection and on-device sensing capability (critical to security and safety), data generation also matters. Essential to any instrumentation system is the ability to produce valuable data and present it meaningfully, explained Jeffs. “When your manufacturing process involves hundreds of different suppliers, imagine the complexity of managing data on the cloud services,” he said. Each supplier processing data in its own cloud needs to pass that data on to your internal site, he added.

What is AI for?

AI is often discussed abstractly. How “smart” is the AI and how does it improve “productivity”?

But for industrial applications, “cost savings” have become one of the most effective pitches to lure AI customers.

Take examples of washing machines, tire pressure and rotors. STMicroelectronics explained that AI could be important in reducing the cost of embedded systems that rely for data on multiple heavy, expensive sensors. Using AI “as a virtual sensor,” some systems could pare the sensor load, said Patrick Aidoune, group vice president, general purpose MCU division at ST.

When a washing machine spins and stops, it needs to know whether the drum is up or down. “Using AI, we were able to deduce the position of the drum from the electrical currents,” said Gerard Cronin, ST’s marketing communications vice president. This eliminates standalone electrical current sensors.

A rotor, the moving component of an electric motor, rotates due to interaction between the winding and magnetic fields, producing torque around the rotor's axis. “You want to measure the temperature of the rotor because it tells you if you are driving too fast,” said Cronin.

But instead of trying to measure heat with a sensor inside the rotor, which you can’t, “you can measure the external temperature of the rotor, and you can infer what the internal temperature is.” AI becomes an additional sensor the systems didn’t have before.
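The "virtual sensor" idea can be sketched in a few lines. The example below is only an illustration under stated assumptions: the calibration numbers are invented, the function names are hypothetical, and a plain least-squares line stands in for whatever model a vendor would actually deploy on an MCU.

```python
# Illustrative "virtual sensor": estimate a quantity that cannot be measured
# directly (internal rotor temperature) from one that can (external temperature).
# All numbers are invented for illustration; they are not ST data.

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical bench calibration: an instrumented rotor lets us log both
# temperatures (degrees Celsius) once, before the product ships.
external_c = [40.0, 50.0, 60.0, 70.0, 80.0]
internal_c = [71.0, 84.5, 101.0, 114.5, 131.0]

slope, intercept = fit_line(external_c, internal_c)

def estimate_internal_temp(external_temp_c):
    """Virtual sensor: infer the internal temperature from the external one."""
    return slope * external_temp_c + intercept

if __name__ == "__main__":
    print(round(estimate_internal_temp(65.0), 1))
```

In the field, only the external reading is available, and the fitted model supplies the missing one. A production system would presumably use more inputs and a trained neural network rather than a single straight line.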

Similar principles apply to tires. One can run an AI application on an MCU to infer the air pressure without pressure sensors.

Big AI, little AI

Some of the AI used inside vehicles is huge, such as AI-enabled vision systems (e.g., from Mobileye and Nvidia).

But as Aidoune noted, AI can also apply to systems driving the motor, checking tire pressure, opening and closing windows, etc. “They will also have AI, because each system will function better with AI,” said Aidoune. These are the MCUs ST is planning to sell by the billions in coming years.

DeepSeek in action

NXP Semiconductors showed off DeepSeek demonstrations at their booth.

One demo running the DeepSeek-R1-Distill-Llama 3.1-8B on NXP’s i.MX 8M Plus (application processor) and Kinara Ara-2 (neural processing unit) showcased DeepSeek’s reasoning ability. (NXP announced the acquisition of Kinara last month.) Asked how many of the letter “r” are in the word “strawberry,” DeepSeek’s AI model explained, somewhat cumbersomely, how it reached the number three.

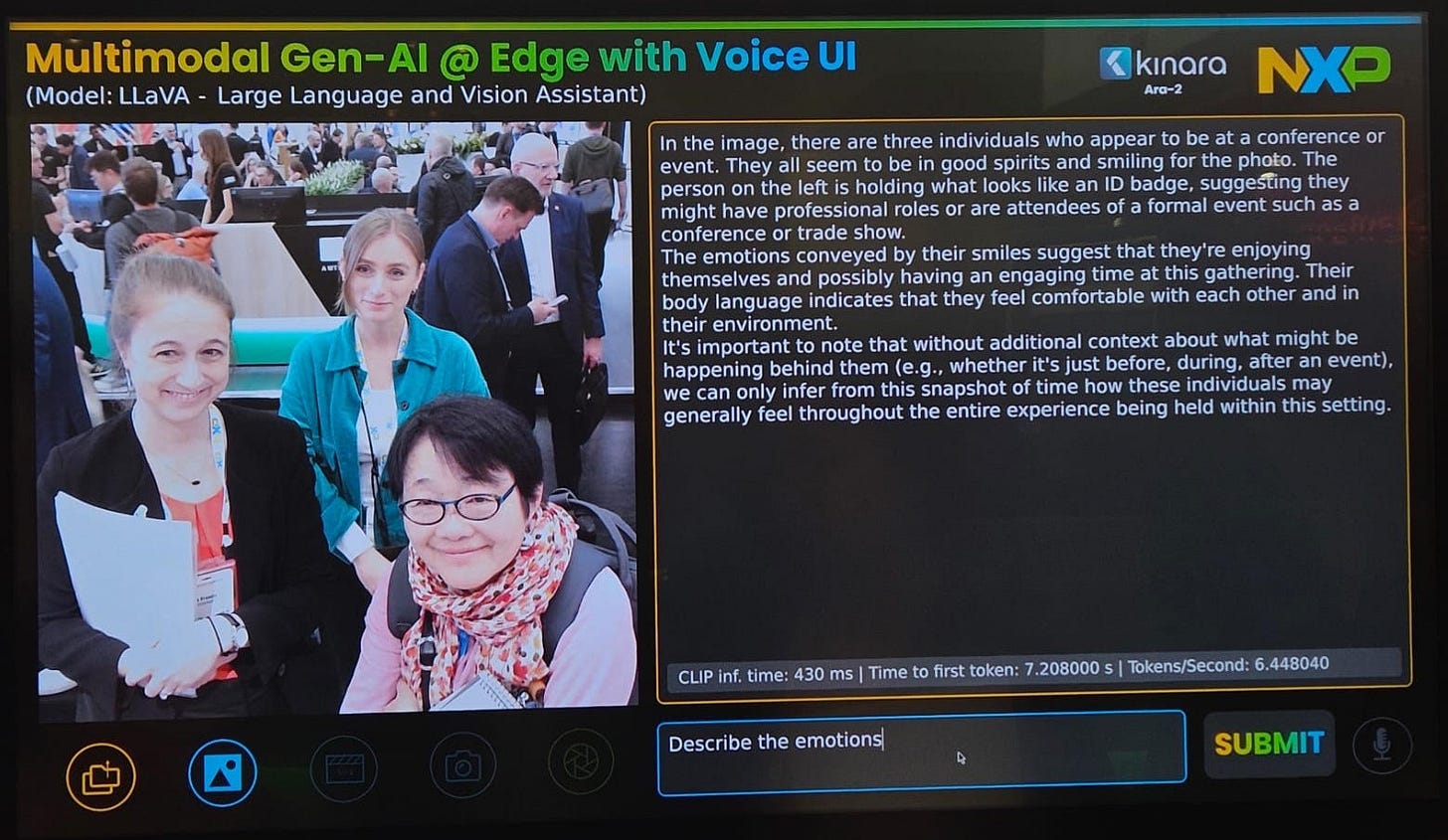

Another demo (pictured) demonstrated AI’s ability to do video and image analysis. The model described in text what it observed and deduced from an image it was shown. The demo used LLaVA on NXP’s i.MX 95 and Kinara Ara-2.

It’s unclear how useful either of these generative AI examples would be in real-life AI applications. However, Ali Ors, director of AI ML strategy and technologies, edge processing at NXP, would be the first to note that not all applications need to run generative AI.

He cautioned against the hype that makes people believe AI will replace everything. That’s when AI’s uptake gets slower, he said.

However, “AI starts to get moving,” said Ors, when vendors convince customers that AI is “about saving them money in terms of feature extraction, the algorithms, the ability to automate parts that used to require the highest expertise.”

Trickle-down effect

Some AI applications are born on the edge and remain at the edge. Others are born in the cloud. At NXP, “We believe in both,” noted Ors.

When something born in the cloud trickles down to the edge and reaches an edge-friendly level, it gets deployed, said Ors. “That [trickle down] is happening faster and faster.”

When moving into generative AI, things will accelerate once you are no longer tuning the model, but tuning its output, said Ors. At that point, “the application on generative AI is seen as an easier task.” But he noted, “Below you, the iceberg keeps growing.”

Ors’ “iceberg” is the fundamental model on which your task sits. “While your task might be changing and looking easier,” Ors noted, “you now have a bigger iceberg that keeps freezing under you.”

At that stage, the structure “propping you up with generative AI is all that software,” he said.

That’s why NXP has been committing energy and money to internally developed software enablement. The goal is to create a unified AI software tool chain that works across NXP’s product portfolio.

What to do, for example, when a customer still using old hardware faces new model requirements with new workloads? “They need the software that can bridge their hardware into the new workload,” said Ors.

Open-source community

Synaptics is one of the most vocal MCU/MPU suppliers advocating the open-source approach. It seeks to enable customers to advance their edge AI embedded applications and systems in the open-source community.

42 Technology’s Jeffs told us, “Synaptics’ open development environment allowed companies like ourselves and Arcturus to develop the instrumentation in just five weeks.”

Since the launch of the Astra edge-AI platform 11 months ago, Synaptics’ commitment to open-source development has enabled many customers to develop Linux-based AI-enabled systems, noted Martyn Humphries, vice president, business and market development at Synaptics.

This did not escape the notice of Google.

Google, concerned with AI fragmentation, signaled approval of Synaptics eschewing proprietary software or operating systems.

Google also found that Synaptics has been developing super low-power architecture for its processors. So has Google. This mutual affinity for low power and open software has led Google to partner with Synaptics to develop “a common open-source compiler,” explained Humphries.

The companies have been exchanging information and proceeding with AI collaborations, but details of their work are yet to be disclosed.

Given that players in the embedded market are interested in using some of the Google Platform, Synaptics sees Google as a tailwind for its Astra edge-AI platform.

AI in every MCU

Many chip suppliers see that their MCUs and MPUs will eventually contain some AI elements. But it remains to be seen how they can help application developers and system designers implement AI on their platforms.

Every MCU and MPU offered on Synaptics’ Astra edge-AI platform is already “AI-native,” claimed Humphries.

This week the company unveiled a new class of MCUs, called the Astra SR-Series, which run on a free RTOS. The new MCUs use what the company calls a “gear-shifting approach.”

Its core comes with three distinct compute domains, which can dynamically shift compute power based on real-time system demands.

The idea for the context-aware ultra-low power operation emerged out of feedback from customers. They told Synaptics their biggest concern is the power required for AI at the edge.

AI edge devices, of course, need to be always on. But what Synaptics created was a very small proprietary compute element, which acts as a timer. In the ultra-low power compute mode, it takes samples of audio and vision, puts them into a buffer, and compares them at the next event. This allows edge AI systems to avoid turning on a power-burning CPU when nothing has changed.
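The buffer-and-compare pattern can be sketched roughly as follows. This is a guess at the general technique, not Synaptics’ implementation: the class name, the mean-absolute-difference comparison and the threshold value are all assumptions made for illustration.

```python
# Sketch of change-triggered wake-up. The class, the comparison metric and
# the threshold are hypothetical; the article does not describe them.

def mean_abs_diff(a, b):
    """Average absolute difference between two equal-length samples."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

class LowPowerGate:
    """Always-on element: buffers one sample and compares at the next event."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.buffer = None  # last sample kept by the low-power domain

    def on_timer_event(self, sample):
        """Return True if the high-power compute domain should be woken."""
        previous, self.buffer = self.buffer, list(sample)
        if previous is None:
            return False  # nothing to compare against yet
        return mean_abs_diff(previous, sample) > self.threshold

# Four tiny made-up "frames": the scene only changes at the third one.
gate = LowPowerGate(threshold=5.0)
frames = [
    [10, 10, 10, 10],
    [10, 11, 10, 9],
    [60, 62, 58, 61],
    [60, 61, 59, 61],
]
decisions = [gate.on_timer_event(frame) for frame in frames]

if __name__ == "__main__":
    print(decisions)
```

Only the frame that differs markedly from the buffered one triggers a wake-up, so the larger compute domains stay off the rest of the time.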

(Source: Synaptics)

Synaptics isn’t alone in promoting MCUs embedded with AI.

Ceva, an IP vendor, announced NeuPro-Nano last year. Calling it “a self-sufficient neural processing unit,” Moshe Sheier, Ceva’s vice president of marketing, made clear that this isn’t an accelerator, because its operation doesn’t need a separate CPU.

Packed inside Ceva’s NPU are signal processing, controller and AI capabilities. Sheier told us that several customers have signed up for Ceva’s NPU IP. They are now building the chips.

Meanwhile, ST has its brand new STM32N6. When it was announced, ST’s MCU chief Remi El-Ouazzane described it as “the industry’s first MCU combined with NPU.” Skeptics questioned whether ST was indeed the first in the industry to roll out NPU-enabled MCUs. ST executives responded that, at the time of the announcement, the STM32N6 was the first MCU already locked and loaded with ST’s own software tools for the NPU.

All told, however, Ceva’s Sheier said the NPU market is “still a bit of wild west, where everyone wants to invent their own engine.”

Potentially, AI-enabled MCU vendors could stumble if they lack solid software tool chains.

As many system vendors search for ways to use AI, with little idea what their AI workload will be, AI is still in early days. If MCU vendors want to sell billions of AI chips, they can’t just encourage potential customers to play with or benchmark AI. They also need to make it easy to develop useful edge AI prototypes.

It’s time, ST’s Cronin quipped, for AI to transition “from homework to real work.”
